This article first appeared in the Autumn 2025 issue of 2600: The Hacker Quarterly.

It started with an Executive Order issued by President Trump on August 6, 2020, that sought to ban American companies and persons from doing business with TikTok’s parent company, ByteDance, or any of its subsidiaries. The ostensible reason was that ByteDance, a company based in the People’s Republic of China, posed a security threat to the United States. Not long after, on August 14, 2020, Trump issued a second Executive Order, this time directing ByteDance to divest all of its operations in the United States within 90 days. This was the first real attempt to ban TikTok in the United States.

This led TikTok to sue the Trump administration, alleging that the executive orders violated its right to due process.

Joe Biden was elected president in November of that year, and in February 2021, shortly into his term, he halted Trump’s plan to ban TikTok by postponing the legal cases that were working their way through the courts.

Talk of a TikTok ban stayed quiet for a good while, though controversies about the app continued, such as the data it collected and the behavior of its algorithm.

Then, on December 2, 2022, during a talk at the University of Michigan’s Ford School of Public Policy, FBI Director Christopher Wray raised concerns that the Chinese government could use TikTok’s recommendation algorithm to manipulate content for influence operations. Among the things he said was “… so all of these things are in the hands of a government that doesn’t share our values, and that has a mission that’s very much at odds with what’s in the best interests of the United States…” Remember this quote. Amid all the scare tactics about invasions of privacy and the potential for espionage lies this one truth.

People in the United States government object to the content shared on TikTok: the speech presented by the app and its algorithm. If it were really about data harvesting, as they claim, Temu and Shein would be the bigger targets. Those Chinese-owned apps are much worse in that regard, but they sell goods; they don’t provide content. The bans so far have overlooked these companies, along with others, foreign and domestic, that harvest and sell our data. Surveillance Capitalism, the driving economic force of the Internet, has data brokering as its foundation.

In this vein, concerns grew about TikTok sharing user data with the Chinese government. Both the FCC and the FBI warned of this possibility, and in February 2023 the White House ordered that TikTok be deleted from all government-issued devices.

The United States government’s next move came about a month later, on March 23, 2023, when TikTok CEO Shou Zi Chew was brought before a congressional committee for almost 6 hours of Sinophobia (though Chew is from Singapore, and TikTok at the time was based in Los Angeles and Singapore and not available in China), misunderstanding of technology, and unfounded accusations of connection to and control by the CCP that echoed and expanded on Wray’s comments four months earlier.

Legislation to ban TikTok was put forward, but it failed to find support in Congress for many months until, a year later, in March 2024, the House of Representatives passed the TikTok sell-or-ban bill. In April, the Senate did the same, and when the bill reached President Biden’s desk, he signed it into law. TikTok and ByteDance sued the federal government on First Amendment grounds, and both a federal appeals court and the Supreme Court upheld the law. Under that law, TikTok was banned as of January 19, 2025.

So what happened between March 2023 and March 2024 that overcame the initial resistance to banning the app and made the ban the law of the land? The answer lies in an event of late 2023 and the coverage on TikTok of what came after: the Hamas attack on Israel on October 7, 2023, and Israel’s genocidal response to that attack.

It’s not often talked about, but the United States economy is driven by war. The United States spends more on its military than the next several countries combined. Counting the Department of Defense along with the contractors and manufacturers of arms and military equipment, America’s defense sector is the largest employer in the country. This is the military-industrial complex that Eisenhower warned the nation about in his farewell address of January 17, 1961. When the American Empire is not directly fighting in conflicts, it will often provide or sell arms to its allies and proxies. The United States has a long history of supporting Israel and the Zionist project on which it is founded. Under President Joe Biden, American weapons and American foreign policy made possible a genocide of the Palestinian people.

The American government’s position on the Palestinian genocide was one of support. It was official American policy to support Israel unconditionally, even in contravention of both domestic and international law.

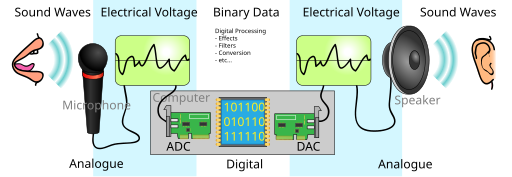

American mass media toed the line, and a pro-Israel / anti-Palestine narrative was the norm in print and on television. The discussions lacked nuance, with people taking binary positions that left no room for real debate or for the human cost. (See my previous article in the Spring 2024 issue.)

On TikTok, however, a different picture of the conflict emerged. Palestinian creators could share their lived experiences directly, without being filtered through Israeli hasbara (explanations/propaganda). These videos were shared widely, and because of how the TikTok algorithm works, many people saw the genocide directly, without the spin and justification of the governments supporting the eradication of a people.

This was the real concern of Democrats and Republicans alike: that mostly young people were getting a narrative that was, in the words of Director Wray, “very much at odds with what’s in the best interests of the United States [Government]” on a platform the government did not control. Other social media platforms cooperated with the interests of the American government. Meta, for example, suppressed posts on Instagram and Threads by Palestinians and by people who took pro-Palestinian stances. But on TikTok, there was an unhindered view of Palestinian suffering and resistance.

The TikTok ban was always conditional. It was a strong-arm tactic to force ByteDance to divest its ownership in favor of American owners, owners who, lawmakers hoped, would be more on board with American narratives.

Well, ByteDance never divested, and in the waning days of the Biden administration the ban went into effect, making TikTok (and other ByteDance apps, such as the game Marvel Snap) unavailable in the United States, but only for about a day. When it came back, American TikTok users were greeted with a message that, thanks to incoming President Trump, there was an agreement to keep TikTok active in the United States.

If there is one thing we know about Trump, it is that he doesn’t make deals from which he doesn’t profit or gain something of value. In this new post-ban era, TikTok operates (illegally) in Trump’s good graces. It now does business in a way that does not upset the powers that be, and it is under the thumb of the United States government. As of this writing, the app has even returned to the Google Play Store and the Apple App Store.

Every level of government is ignoring the fact that TikTok is operating illegally under a law passed by Congress, signed by the President, and upheld by the courts. This small thing is done to normalize ignoring the law. TikTok is widely popular, and the ban, as censorious and wrong as it is, is widely unpopular. If a law is going to be ignored, this is a wily choice for the first one. And make no mistake: ignoring a law and a court ruling on the first day of the Trump administration is a first, and I predict it will be one of many.

As I write this in the first week of March 2025, the actions of Elon Musk’s DOGE are being blocked in the courts, with decisions finding that they clearly break the law, and everyone is waiting to see whether the executive branch will comply with the courts. My prediction is that the Trump administration will continue its lawlessness, ignoring any statute or court opinion contrary to its agenda.

Now, two weeks later, as I work on a second draft of this article, the Trump administration has targeted legal permanent residents (Green Card holders) who hold pro-Palestinian views for deportation, attempting to skip the due process usually afforded Green Card holders and branding them criminals and terrorists for not supporting the American-funded genocide by Israel against the Palestinian people in Gaza. The first was Mahmoud Khalil, who has not been charged with any crime, unless you imagine we live in a time when thoughtcrime is prosecutable. Others have since followed.

And this is how it starts. Authoritarians begin with things that are actually popular, like ignoring a law that would keep people from their favorite app, or persecuting a human group that makes up at most 1.4% of the population, for example with a ban that affects fewer than 10 college athletes out of more than 510,000. Fascism starts small so it can make bigger moves later. It’s “just” ignoring an unpopular ban, before other laws, laws that protect the vulnerable, get ignored. It’s “just” persecuting trans people, until the same mechanisms are used to persecute other human groups, maybe even one you find yourself in.

Shout Outs: Sista, Owlerine, Raincoaster, Cosmic Surfer